The Great ADAS Debate: Can Camera-Only Systems Truly Deliver Autonomous Driving?

As automotive technology evolves, more and more cars are equipped with Advanced Driver-Assistance Systems (ADAS) that use cameras and other sensors to help drivers stay safe on the road. However, relying on cameras alone can lead to dangerous situations. So, can camera-only ADAS keep drivers and passengers safe and enable autonomous vehicles? The short answer is: Not yet!

Sensor fusion in ADAS systems

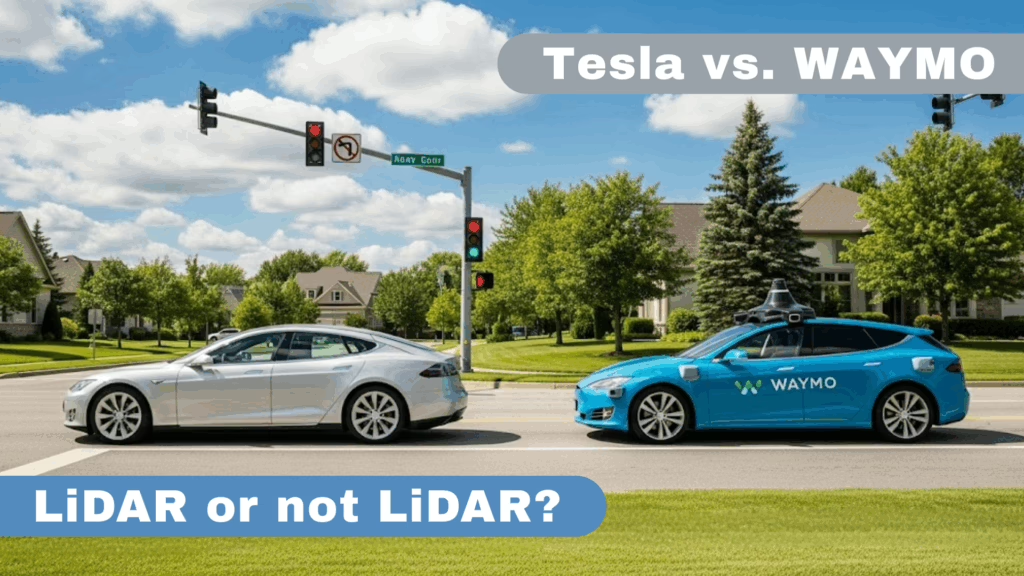

Tesla has made headlines for its plan to develop ADAS based solely on camera technology. This approach is controversial, as many experts argue that combining cameras with other sensors, such as radar and lidar, is essential for ensuring the safety of ADAS. Indeed, while cameras are an integral part of ADAS, their present limitations can result in reduced accuracy, poor performance in adverse weather conditions, and limited visibility.

When ADAS cannot see

Camera-based driving systems have recently come under scrutiny following accidents involving Tesla’s Autopilot system. Such accidents can occur when the ADAS fails to detect obstacles in certain situations. For instance, in heavy rain, fog, or snow, the view of a camera-only system can be obstructed, leading to accidents. Although cameras are crucial in feeding data to the AI (Artificial Intelligence) driving the car, camera-only systems must overcome these limitations before fully autonomous vehicles can be deployed on the road.

Current workaround to fix ADAS’s problems

To overcome the limitations of camera-only ADAS, the current solution is to integrate cameras with other sensors, such as radar and lidar. This combination provides additional information about the vehicle’s surroundings, improving the overall effectiveness and safety of the system.
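As a rough illustration of how combining sensors can help, the sketch below fuses two distance estimates for the same obstacle by weighting each with the inverse of its variance. This is one simple, textbook way to combine independent measurements, not a description of any production ADAS. The `RangeEstimate` type, the `fuse` function, and all the numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    distance_m: float  # estimated distance to the obstacle, in meters
    variance: float    # uncertainty of the estimate (m^2); larger means less trusted


def fuse(a: RangeEstimate, b: RangeEstimate) -> RangeEstimate:
    """Inverse-variance weighted average of two independent estimates."""
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_distance = (w_a * a.distance_m + w_b * b.distance_m) / (w_a + w_b)
    fused_variance = 1.0 / (w_a + w_b)
    return RangeEstimate(fused_distance, fused_variance)


# Hypothetical readings: in fog the camera estimate is noisy, the radar one is not.
camera_in_fog = RangeEstimate(distance_m=40.0, variance=25.0)
radar = RangeEstimate(distance_m=32.0, variance=1.0)

fused = fuse(camera_in_fog, radar)
print(f"fused distance: {fused.distance_m:.2f} m, variance: {fused.variance:.2f} m^2")
```

With these made-up inputs the fused estimate stays close to the radar reading, because the degraded camera measurement carries little weight, and the fused variance is lower than that of either sensor alone.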

The future of ADAS

In the future, advancements in camera technology hold promise for further improving the safety of ADAS. One potential solution is to develop cameras that mimic the human visual system. This would involve using multiple cameras to provide a wider field of view and a more accurate representation of the environment, much as our two eyes work together to provide depth perception.
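To make the depth-perception idea concrete, here is a minimal sketch of the standard stereo relation for two parallel, rectified pinhole cameras: depth equals focal length times baseline divided by disparity. The function name `depth_from_disparity` and the camera parameters are assumptions for illustration; a real system would also need calibration and a way to match pixels between the two images.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth (m) of a point seen by two parallel, rectified cameras.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, cameras 0.3 m apart.
near = depth_from_disparity(1000.0, 0.3, disparity_px=10.0)
far = depth_from_disparity(1000.0, 0.3, disparity_px=5.0)
print(f"disparity 10 px -> {near:.1f} m, disparity 5 px -> {far:.1f} m")
```

Halving the disparity doubles the estimated depth, which is why distant objects, whose disparity is only a few pixels, are harder to range accurately with cameras alone.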

Moreover, camera technology that adapts to lighting and weather conditions while providing better resolution and contrast could make ADAS safer. These advancements could help cameras detect hazards and objects more accurately and quickly, improving road safety.

What’s next with EYE-TECH technology

EYE2DRIVE supports the evolution of autonomous vehicles and ADAS technologies by proposing a technology that mimics the flexibility of the human eye, adapting its response to environmental and illumination conditions. The result is information-rich images, ready to be processed by AI algorithms, that help make camera-only ADAS reliable and safe.